Ownership – in the Software Development Organization

December 7, 2022

by Tanvi Shah

Originally published on Medium

For any organization to succeed, the most crucial ingredient is trust. When customers invest in your product, they build business-critical workflows, and sometimes their very ability to keep operating reliably, around your services.

To deliver products this critical to its own customers, an organization needs a high degree of alignment and synergy in how its teams function. These, in turn, rest on human-to-human interaction and on implicit and explicit communication, all built on a single underlying assumption: that every person on the team is working towards a single unified goal, knows what the larger definition of success looks like, and understands the part they play in that success.

In simple terms, every individual owns a piece of the product and its problem space, and the orchestration between these pieces results in overall team success. A string of small successes for each individual on the team means milestones and goals are being met with steady regularity, and the team is inching closer and closer to victory.

Ownership is really about taking initiative and understanding that taking action is your responsibility, not someone else’s. It’s the deep understanding that you, as an individual, are accountable for the delivery of an outcome, even where others have a role to play. It means you have to engage and motivate individuals (get the buy-in), set timelines and goals (define milestones and ultimate success), eliminate blockers, reward positive outcomes, and analyze and solve or mitigate negative outcomes (operationalize). It means caring about the outcome as much as you would if you owned the organization. Let’s walk through this using one of the many stability projects we recently undertook to improve product quality at my current company.

The goal

Improve product quality and stability using static code analysis as a tool to reduce the introduction of potential defects.

For the purposes of this discussion, we will define the goal and narrow our focus as follows:

Why

Early feedback during the software development process is the most underappreciated, yet highest-ROI, tool in the modern software development stack. If developers can recognize and eliminate bugs as they build, their ability to build cleaner, more resilient software increases with every line of code they write, even before that code moves from their development environments to shared repositories and on to shipped code.

“The refinement of techniques for the prompt discovery of error serves as well as any other as a hallmark of what we mean by science.” — J. Robert Oppenheimer

Most software engineers would suggest automation and unit testing as the answer to this conundrum. While we cannot discount the benefits of automation, there is a key flaw in treating it as the only answer. Automation only accounts for the lines of code you do write. But what about the necessary lines of code you don’t? (Key examples include missing validity and null checks, unhandled memory leaks, missing best practices, and so on.)

What

Enter static analysis. Simply put, static code analysis is a method of analyzing the source code of programs without running them. It can discover formatting problems, null pointer dereferences, and other simple but frequent error scenarios.

One data point helps quantify the severity of the issue, and in turn the value of static code analysis in your workflow: null dereferencing is a common type of programming error in Java. On Android, NullPointerException (NPE) errors are the largest cause of app crashes on Google Play. Since Java doesn’t provide tools to express and check nullness invariants, developers have to rely on testing and dynamic analysis to improve the reliability of their code.
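To make the gap concrete, here is a small, hypothetical Java example of the kind of missing line that happy-path tests rarely exercise. `Map.get` returns `null` for an absent key, so the unsafe version throws a `NullPointerException` the first time an unknown ID reaches it. This is the class of defect that null-dereference rules in static analyzers target, although whether a particular tool flags this exact pattern depends on its rules and configuration. The class and method names are illustrative only.

```java
import java.util.Map;

// Hypothetical example: a lookup whose result can be null when the key is absent.
public class NullDerefExample {

    // Unsafe: Map.get returns null for a missing key, so trim() throws
    // NullPointerException. A unit test that only uses known keys never
    // reaches this path, which is why the missing check goes unnoticed.
    static String displayNameUnsafe(Map<String, String> names, String userId) {
        return names.get(userId).trim();
    }

    // Safe: the missing-key case is handled before the dereference.
    static String displayNameSafe(Map<String, String> names, String userId) {
        String name = names.get(userId);
        if (name == null) {
            return "unknown";
        }
        return name.trim();
    }

    public static void main(String[] args) {
        Map<String, String> names = Map.of("u1", "  Ada  ");
        System.out.println(displayNameSafe(names, "u1")); // Ada
        System.out.println(displayNameSafe(names, "u2")); // unknown
        try {
            displayNameUnsafe(names, "u2");
        } catch (NullPointerException e) {
            System.out.println("unsafe version threw NullPointerException");
        }
    }
}
```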

For those unfamiliar with the practice, here’s a quick introduction and primer on what these tools can do. Our vendor of choice for this initiative was SonarCloud.

Who, where and when

The focus of this initiative was to improve the tooling and, in turn, the day-to-day experience for developers. We introduced it in our pull request pipelines, and the reason for this choice was twofold.

The first was to give developers early feedback about the security, quality, testability, reliability and maintainability of their code, incrementally and change by change. This lets them fix things in a focused manner, without the added cost of context switching and the pressure of systems failing in production.

The second was to focus code reviews on the more critical aspects of functional and architectural correctness, instead of requiring reviewers to comb through the code for obvious issues.

A further side effect was better-quality builds for internal demos and pre-release testing.

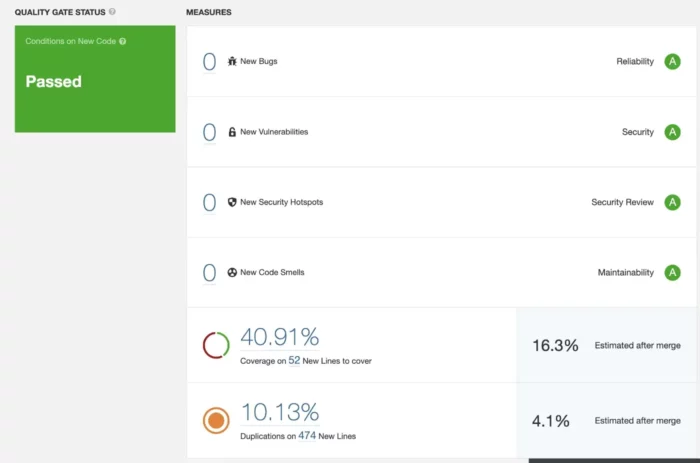

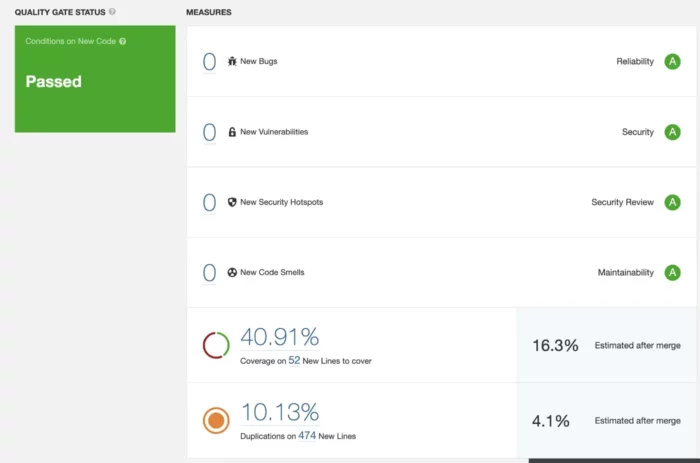

Sample screenshot of a PR report in SonarQube:

With quality gates, you can enforce ratings (reliability, security, security review, and maintainability) based on metrics for overall code and for new code. These metrics are part of the default quality gate.

Based on these metrics, a PR scorecard like the one above can be a very effective and objective measure of PR readiness, and a much better representation of phase 1 of the “Done state” of a unit of work. In scrum and agile processes, it is invaluable for every developer in the organization to share a uniform notion of what “Done” means: communication, both explicit and perceived, then carries the same meaning and interpretation across teams, levels and stakeholders. Rolling this up, a cumulative evaluation using an objective system helps assess the stages and health of a project, and from there the health of company-wide initiatives. That, in turn, strengthens the organization’s overall ability to plan, execute and deliver major company-wide initiatives on time, every time, earning and strengthening its trust bank.

The cost of this approach was the added time of running the analysis on every minor change rather than on batched changes.

Operationalizing the initiative

Groundwork and preparation

The first step was to add the tooling to the current pipeline and turn it on, running an initial analysis to assess and mark our starting point. In terms of ownership, this meant learning how to set up, integrate and configure the tool, obtaining the right permissions, and running the analysis on our codebase along the least disruptive path possible. A mistake at this stage usually has a negative multiplier effect on developer productivity and creates an early negative bias towards the initiative. A successful pilot, on the other hand, meant deriving the actual number of defects and their breakdown by team, severity, mitigation and lifecycle. Another key measure of the tool’s quality was reviewing a good sample from each cell of that breakdown to confirm there were few to no false positives, so that adding this extra cognitive context and operational effort to every developer’s day-to-day workflow was worth the time. The closest analogy that comes to mind is an X-ray scan of your codebase.
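As a rough illustration of the breakdown step, the sketch below counts exported findings per team and per severity. It assumes a hypothetical `Defect` record and a five-level severity scale (`BLOCKER`, `CRITICAL`, `MAJOR`, `MINOR`, `INFO`, mirroring SonarQube’s classic issue severities). It is not SonarCloud’s export format or API, and it leaves out the mitigation and lifecycle dimensions.

```java
import java.util.EnumMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class DefectBreakdown {

    // Assumed severity scale, ordered from most to least severe.
    enum Severity { BLOCKER, CRITICAL, MAJOR, MINOR, INFO }

    // Hypothetical shape of one exported finding.
    record Defect(String team, Severity severity, String rule) {}

    // Counts defects per team (sorted by name), then per severity within each team.
    static Map<String, Map<Severity, Long>> breakdown(List<Defect> defects) {
        Map<String, Map<Severity, Long>> result = new TreeMap<>();
        for (Defect d : defects) {
            result.computeIfAbsent(d.team(), team -> new EnumMap<>(Severity.class))
                  .merge(d.severity(), 1L, Long::sum);
        }
        return result;
    }

    public static void main(String[] args) {
        List<Defect> defects = List.of(
                new Defect("payments", Severity.BLOCKER, "null-deref"),
                new Defect("payments", Severity.MAJOR, "unused-variable"),
                new Defect("search", Severity.CRITICAL, "resource-leak"),
                new Defect("payments", Severity.BLOCKER, "null-deref"));
        // Prints {payments={BLOCKER=2, MAJOR=1}, search={CRITICAL=1}}
        System.out.println(breakdown(defects));
    }
}
```

Using an `EnumMap` keeps each team’s counts in severity order, which makes the most severe cells easy to sample first when checking for false positives.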

Identifying the key stakeholders, responsibilities and rewards

Once we had the breakdown, we had two things to worry about: first, preventing or at the very least reducing new bugs, and second, working through existing defects without disrupting teams’ current feature development plans.

For the first problem, we used a moving-window approach: allow up to x critical and y blocker defects across all new code written over a rolling 30-day period. We closely monitored and kept refining the quality gates over this window to move gradually towards zero. This made the process far less brutal and chaotic than enforcing the strictest possible levels on day one. As the project owner, the responsibility for monitoring those gates and tightening them at the end of every day, without causing disruptions, fell on me.
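The moving-window gate can be sketched as a check that counts only the defects introduced into new code during the last 30 days and compares them with per-severity budgets, plus a step that tightens those budgets towards zero. This is a hypothetical illustration of the idea, not how SonarCloud quality gates are configured; the `RollingWindowGate` class, its budgets, and the one-step `tighten()` policy are all invented for the example.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.List;

public class RollingWindowGate {

    enum Severity { BLOCKER, CRITICAL, MAJOR, MINOR, INFO }

    // Hypothetical finding in new code, stamped with when it was introduced.
    record NewDefect(Severity severity, Instant introducedAt) {}

    // Only defects introduced within this rolling window count against the budget.
    static final Duration WINDOW = Duration.ofDays(30);

    private int maxCritical; // "x" in the text
    private int maxBlocker;  // "y" in the text

    RollingWindowGate(int maxCritical, int maxBlocker) {
        this.maxCritical = maxCritical;
        this.maxBlocker = maxBlocker;
    }

    // Counts defects of one severity introduced after the window's start.
    private static long countRecent(List<NewDefect> defects, Severity severity, Instant cutoff) {
        return defects.stream()
                .filter(d -> d.severity() == severity)
                .filter(d -> d.introducedAt().isAfter(cutoff))
                .count();
    }

    // Passes while recent critical and blocker counts stay within their budgets.
    boolean passes(List<NewDefect> defects, Instant now) {
        Instant cutoff = now.minus(WINDOW);
        return countRecent(defects, Severity.CRITICAL, cutoff) <= maxCritical
                && countRecent(defects, Severity.BLOCKER, cutoff) <= maxBlocker;
    }

    // Moves both budgets one step closer to zero, never below it.
    void tighten() {
        maxCritical = Math.max(0, maxCritical - 1);
        maxBlocker = Math.max(0, maxBlocker - 1);
    }

    public static void main(String[] args) {
        Instant now = Instant.parse("2022-12-01T00:00:00Z");
        List<NewDefect> defects = List.of(
                new NewDefect(Severity.CRITICAL, now.minus(Duration.ofDays(2))),
                new NewDefect(Severity.CRITICAL, now.minus(Duration.ofDays(45))), // outside the window
                new NewDefect(Severity.BLOCKER, now.minus(Duration.ofDays(10))));

        RollingWindowGate gate = new RollingWindowGate(2, 1);
        System.out.println(gate.passes(defects, now)); // true: 1 critical and 1 blocker in the window
        gate.tighten();
        System.out.println(gate.passes(defects, now)); // false: budgets are now 1 critical, 0 blockers
    }
}
```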

For the second problem, engineering leaders on all teams had to define milestones for reducing the identified backlog and arrive at common ground based on impact and feasibility, given other priorities. We agreed to work through all defects in the top two of the five severity categories. This meant thinly resourced teams needed additional help in the form of developer hours. In extreme cases, as the project owner, this meant fixing defects for other teams and collaborating on code review cycles, including implementing and testing review feedback, to give them the most engaged help possible and to make sure we weren’t breaking any existing functionality. At one point the experience became so enjoyable that we extended our scope and resolved 25% of the defects in the third severity category as well! It pushed us to discover and leverage the full power of the tool, and to gamify the entire experience.

The reward was a 30% net reduction in defects (incoming plus existing) at a cost of only 8 developer hours per week over 6 weeks, or 48 developer hours in total.

Enhancing the experience via continued feedback and impact analysis

Easy onboarding: We made developer onboarding and offboarding seamless by integrating them with the same internal SSO security protocols used to access all our other development tools.

Seamless assignment, resolution and verification: Triage and assignment to onboarded developers happened within SonarCloud itself. Developers could then decide which issues needed code fixes and resolve false positives with appropriate comments, again within the tool. For confirmed defects, once a subsequent scan detected that a fix had been made, the issue was resolved automatically without manual intervention.

Notifications: Several kinds of update notifications can be sent to the team.

After initial demos to the leadership team, we sent out regular status updates on the ongoing work to wrap up missing integrations, along with the latest bug count reports.

Conclusion

Ownership is about knowing, planning and executing. It involves preparation and research. It is about the willingness to learn, and to risk investing your time and cognitive energy in something that may turn out to be a dead end.

It is not about authority or knowing all the answers, but about demonstrating the desire to continually think ahead, plan, engage your team, manage your costs, and know where to look in a crisis.

Further suggested reading: https://engineering.fb.com/2022/11/22/developer-tools/meta-java-nullsafe/

TL;DR: When it comes to Java, there have been multiple attempts to add nullness annotations, starting with JSR-305, but there is still a lot of room for improvement. The current landscape of static analysis tools has plenty of worthy contenders, such as the Checker Framework, SpotBugs, Error Prone, and NullAway, to name a few. In particular, Uber walked the same path, making its Android codebase null-safe using the NullAway checker. However, the checkers perform nullness analysis in different and subtly incompatible ways, which requires careful consideration and a detailed feasibility analysis before choosing and implementing a tool. The lack of standard annotations with precise semantics has constrained the use of static analysis for Java throughout the industry.

This is exactly the problem that the JSpecify working group, started in 2019 and made up of Java developers from a wide spectrum of major tech companies, aims to address.
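For readers new to nullness annotations, the sketch below shows the idea in plain Java: a `@Nullable` type-use annotation on a return type records that callers must handle a missing value, and a nullness checker would typically report a dereference of that value that is not preceded by a null check. To stay dependency-free, the example declares its own `@Nullable`; in a real project you would use a published annotation such as JSpecify’s `org.jspecify.annotations.Nullable`. The annotation does nothing at runtime on its own, and the class and method names here are illustrative.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.Map;

public class NullnessAnnotations {

    // Local stand-in for a published annotation such as
    // org.jspecify.annotations.Nullable, declared here only so the
    // example compiles without extra dependencies.
    @Retention(RetentionPolicy.CLASS)
    @Target(ElementType.TYPE_USE)
    @interface Nullable {}

    private static final Map<String, String> EMAILS = Map.of("u1", "ada@example.com");

    // The annotated return type documents that the result may be absent.
    static @Nullable String findEmail(String userId) {
        return EMAILS.get(userId);
    }

    // A nullness checker would expect this null check before the dereference;
    // removing it is the kind of change such a tool is designed to report.
    static String emailDomain(String userId) {
        String email = findEmail(userId);
        if (email == null) {
            return "none";
        }
        return email.substring(email.indexOf('@') + 1);
    }

    public static void main(String[] args) {
        System.out.println(emailDomain("u1")); // example.com
        System.out.println(emailDomain("u2")); // none
    }
}
```

Which checker enforces such an annotation, and what it assumes about unannotated code, is precisely where the tools listed above differ.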